We are giving this invention away for free to anyone who wants to build an AI assistant startup:

(Read the warning at the end of this post.)

It should be possible to build an interface for telepathic (silent) communication with an AI assistant in a smartphone equipped with a neurophone sensor: https://youtu.be/U_QxkirKW74

My suggestion is to use the Touch ID sensor for communication with the AI: when you touch the sensor, you hear the assistant through your skin: https://www.lifewire.com/sensors-that-make-iphone-so-cool-2…

And an interface based on this information for speaking with the assistant: The Audeo is a sensor/device that detects activity in the larynx (aka voice box) through EEG (electroencephalography). The Audeo is unique in its use of EEG in that it detects and analyzes signals outside the brain on their path to the larynx.[1] The neurological signals/data are then encrypted and transmitted to a computer to be processed using the company's software (which can be seen in use in Kimberly Beals' video).[2] Once the data has been analyzed and processed, it can be rendered by a computer speech generator.

Possibilities

The Audeo is a great sensor/device for detecting imagined speech. It has countless uses, especially in our areas of study. Here are some videos that show what the Audeo can be used for: http://nerve.boards.net/…/79/audeo-ambient-using-voice-input

In a $6.3 million Army initiative to invent devices for telepathic communication, Gerwin Schalk, funded by a $2.2 million grant, found that it is possible to use ECoG (https://en.m.wikipedia.org/wiki/Electrocorticography) signals to discriminate the vowels and consonants embedded in spoken and in imagined words. http://m.phys.org/…/2008-08-scientists-synthetic-telepathy.…

The results shed light on the distinct mechanisms associated with production of vowels and consonants, and could provide the basis for brain-based communication using imagined speech. https://books.google.se/books…

http://scholar.google.se/scholar…

Research into synthetic telepathy using subvocalization (https://en.m.wikipedia.org/wiki/Subvocalization) is taking place at the University of California, Irvine, under lead scientist Mike D'Zmura. The first such communication took place in the 1960s, when EEG was used to produce Morse code from brain alpha waves.

https://en.m.wikipedia.org/wiki/Subvocal_recognition

https://en.m.wikipedia.org/wiki/Throat_microphone

https://en.m.wikipedia.org/wiki/Silent_speech_interface

Why do Magnus Olsson and Leo Angelsleva give you this opportunity for free?

Because Facebook can use you and your data in research for free, and I think someone other than Mark Zuckerberg should get this opportunity: https://m.huffpost.com/us/entry/5551965

Neurotechnology, Elon Musk and the goal of human enhancement

At the World Government Summit in Dubai in February, Tesla and SpaceX chief executive Elon Musk said that people would need to become cyborgs to be relevant in an artificial intelligence age. He said that a “merger of biological intelligence and machine intelligence” would be necessary to ensure we stay economically valuable.

Soon afterwards, the serial entrepreneur created Neuralink, with the intention of connecting computers directly to human brains. He wants to do this using “neural lace” technology – implanting tiny electrodes into the brain for direct computing capabilities.

Brain-computer interfaces (BCI) aren’t a new idea. Various forms of BCI are already available, from ones that sit on top of your head and measure brain signals to devices that are implanted into your brain tissue.

They are mainly one-directional, with the most common uses enabling motor control and communication tools for people with brain injuries. In March, a man who was paralysed from the neck down moved his hand using the power of concentration.

Cognitive enhancement

But Musk’s plans go beyond this: he wants to use BCIs in a bi-directional capacity, so that plugging in could make us smarter, improve our memory, help with decision-making and eventually provide an extension of the human mind.

“Musk’s goals of cognitive enhancement relate to healthy or able-bodied subjects, because he is afraid of AI and that computers will ultimately become more intelligent than the humans who made the computers,” explains BCI expert Professor Pedram Mohseni of Case Western Reserve University, Ohio, who sold the rights to the name Neuralink to Musk.


“He wants to directly tap into the brain to read out thoughts, effectively bypassing low-bandwidth mechanisms such as speaking or texting to convey the thoughts. This is pie-in-the-sky stuff, but Musk has the credibility to talk about these things,” he adds.

Musk is not alone in believing that “neurotechnology” could be the next big thing. Silicon Valley is abuzz with similar projects. Bryan Johnson, for example, has also been testing “neural lace”. He founded Kernel, a startup to enhance human intelligence by developing brain implants linking people’s thoughts to computers.

In 2015, Facebook CEO Mark Zuckerberg said that people will one day be able to share “full sensory and emotional experiences” online – not just photos and videos. Facebook has been hiring neuroscientists for an undisclosed project at its secretive hardware division, Building 8.

However, it is unlikely this technology will be available anytime soon, and some of the more ambitious projects may be unrealistic, according to Mohseni.

Pie-in-the-sky

“In my opinion, we are at least 10 to 15 years away from the cognitive enhancement goals in healthy, able-bodied subjects. It certainly appears to be, from the more immediate goals of Neuralink, that the neurotechnology focus will continue to be on patients with various neurological injuries or diseases,” he says.

Mohseni says one of the best current examples of cognitive enhancement is the work of Professor Ted Berger, of the University of Southern California, who has been working on a memory prosthesis to replace the damaged parts of the hippocampus in patients who have lost their memory due to, for example, Alzheimer’s disease.

“In this case, a computer is to be implanted in the brain that acts similarly to the biological hippocampus from an input and output perspective,” he says. “Berger has results from both rodents and non-human primate models, as well as preliminary results in several human subjects.”

Mohseni adds: “The [US government’s] Defense Advanced Research Projects Agency (DARPA) currently has a programme that aims to do cognitive enhancement in their soldiers – ie enhance learning of a wide range of cognitive skills, through various mechanisms of peripheral nerve stimulation that facilitate and encourage neural plasticity in the brain. This would be another example of cognitive enhancement in able-bodied subjects, but it is quite pie-in-the-sky, which is exactly how DARPA operates.”

Understanding the brain

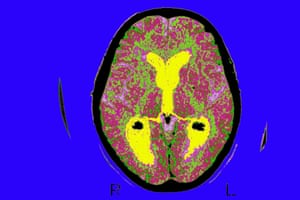

In the UK, research is ongoing. Davide Valeriani, senior research officer at University of Essex’s BCI-NE Lab, is using an electroencephalogram (EEG)-based BCI to tap into the unconscious minds of people as they make decisions.


“Everyone who makes decisions wears the EEG cap, which is part of a BCI, a tool to help measure EEG activity ... it measures electrical activity to gather patterns associated with confident or non-confident decisions,” says Valeriani. “We train the BCI – the computer basically – by asking people to make decisions without knowing the answer and then tell the machine, ‘Look, in this case we know the decision made by the user is correct, so associate those patterns to confident decisions’ – as we know that confidence is related to probability of being correct. So during training the machine knows which answers were correct and which ones were not. The user doesn’t know all the time.”
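To make the training step Valeriani describes a little more concrete, here is a toy sketch in Python. It assumes each EEG recording has already been reduced to a short feature vector, and it uses a simple nearest-centroid rule as a stand-in classifier; the feature values, labels and function names (`train_centroids`, `predict`) are hypothetical and are not the Essex lab's actual method.

```python
"""Toy sketch of supervised BCI training on labelled decisions.

Assumptions (not from the source): each EEG epoch is already a short
feature vector, and a nearest-centroid rule stands in for the real classifier.
"""
from math import dist
from statistics import fmean


def train_centroids(epochs, labels):
    """Average the feature vectors belonging to each label."""
    groups = {}
    for features, label in zip(epochs, labels):
        groups.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in groups.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical training data: during training the correctness of each
# decision is known, so patterns from correct answers are labelled 'confident'.
training_epochs = [[0.9, 0.2], [0.8, 0.3], [0.1, 0.7], [0.2, 0.9]]
training_labels = ["confident", "confident", "unsure", "unsure"]

centroids = train_centroids(training_epochs, training_labels)
print(predict(centroids, [0.85, 0.25]))  # → confident
```

The point of the sketch is the workflow, not the classifier: the machine only learns because, during training, the experimenters already know which answers were correct.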

Valeriani adds: “I hope more resources will be put into supporting this very promising area of research. BCIs are not only an invaluable tool for people with disabilities, but they could be a fundamental tool for going beyond human limits, hence improving everyone’s life.”

He notes, however, that one of the biggest challenges with this technology is that first we need to better understand how the human brain works before deciding where and how to apply BCI. “This is why many agencies have been investing in basic neuroscience research – for example, the Brain initiative in the US and the Human Brain Project in the EU.”

Whenever there is talk of enhancing humans, moral questions remain – particularly around where the human ends and the machine begins. “In my opinion, one way to overcome these ethical concerns is to let humans decide whether they want to use a BCI to augment their capabilities,” Valeriani says.

“Neuroethicists are working to give advice to policymakers about what should be regulated. I am quite confident that, in the future, we will be more open to the possibility of using BCIs if such systems provide a clear and tangible advantage to our lives.”

Facebook is building brain-computer interfaces


The plan is to eventually build non-implanted devices that can ship at scale. And to tamp down on the inevitable fear this research will inspire, Facebook tells me “This isn’t about decoding random thoughts. This is about decoding the words you’ve already decided to share by sending them to the speech center of your brain.” Facebook likened it to how you take lots of photos but only share some of them. Even with its device, Facebook says you’ll be able to think freely but only turn some thoughts into text.

Skin-Hearing

Meanwhile, Building 8 is working on a way for humans to hear through their skin. It’s been building prototypes of hardware and software that let your skin mimic the cochlea in your ear that translates sound into specific frequencies for your brain. This technology could let deaf people essentially “hear” by bypassing their ears.

A team of Facebook engineers was shown experimenting with hearing through skin using a system of actuators tuned to 16 frequency bands. A test subject was able to develop a vocabulary of nine words they could hear through their skin.
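The idea of letting the skin stand in for the cochlea amounts to splitting sound into frequency bands and driving one actuator per band. The Python sketch below illustrates that under stated assumptions: the 16 bands come from the description above, but the equal band widths, the 8 kHz sample rate, the naive DFT and the names `magnitude_spectrum` and `band_intensities` are all hypothetical, since Facebook did not describe its signal processing. (A real cochlea spaces frequencies roughly logarithmically, not equally.)

```python
"""Toy sketch: turn one audio frame into 16 actuator intensities.

Assumptions (not from the source): equally spaced bands, an 8 kHz sample
rate and a naive DFT; the prototype's real processing was not described.
"""
import cmath
import math

NUM_BANDS = 16       # the 16 frequency bands mentioned in the text
SAMPLE_RATE = 8000   # hypothetical sample rate in Hz


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0 .. N/2 - 1 (fine for short frames)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(frame)))
        for k in range(n // 2)
    ]


def band_intensities(frame, num_bands=NUM_BANDS):
    """Sum spectral energy per band, normalised so the loudest band is 1.0."""
    spectrum = magnitude_spectrum(frame)
    per_band = len(spectrum) // num_bands
    energies = [
        sum(m * m for m in spectrum[b * per_band:(b + 1) * per_band])
        for b in range(num_bands)
    ]
    peak = max(energies) or 1.0
    return [e / peak for e in energies]


# A pure 1 kHz tone: with 256 samples at 8 kHz each DFT bin is 31.25 Hz wide,
# so 1 kHz lands in bin 32, which belongs to band 32 // 8 = 4.
frame = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(256)]
levels = band_intensities(frame)
print(levels.index(max(levels)))  # → 4
```

Each entry of `levels` could then set the vibration strength of one actuator, so a listener would feel a 1 kHz tone as activity on a single spot of skin.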

To underscore the gravity of Building 8’s mind-reading technology, Dugan started her talk by saying she’s never seen something as powerful as the smartphone “that didn’t have unintended consequences.” She mentioned that we’d all be better off if we looked up from our phones every so often. But at the same time, she believes technology can foster empathy, education and global community.

Building 8’s Big Reveal

Facebook hired Dugan last year to lead its secretive new Building 8 research lab. She had previously run Google’s Advanced Technology and Projects division, and was formerly the director of DARPA.

Facebook built a special Area 404 wing of its Menlo Park headquarters with tons of mechanical engineering equipment to help Dugan’s team quickly prototype new hardware. In December, it signed rapid collaboration deals with Stanford, Harvard, MIT and more to get academia’s assistance.

Yet until now, nobody really knew what Building 8 was…building. Business Insider had reported on Building 8’s job listings and that it might show off news at F8.

According to these job listings, Facebook is looking for a Brain-Computer Interface Engineer “who will be responsible for working on a 2-year B8 project focused on developing advanced BCI technologies.” Responsibilities include “Application of machine learning methods, including encoding and decoding models, to neuroimaging and electrophysiological data.” It’s also looking for a Neural Imaging Engineer who will be “focused on developing novel non-invasive neuroimaging technologies” who will “Design and evaluate novel neural imaging methods based on optical, RF, ultrasound, or other entirely non-invasive approaches.”

Elon Musk has been developing his own startup called Neuralink for creating brain interfaces.


Facebook has built hardware before with mixed success. It made an Android phone with HTC called the First to host its Facebook Home operating system. That flopped. Since then, Facebook proper has turned its attention away from consumer gadgetry and toward connectivity. It’s built the Terragraph Wi-Fi nodes, Project ARIES antenna, Aquila solar-powered drone and its own connectivity-beaming satellite from its internet access initiative — though that blew up on the launch pad when the SpaceX vehicle carrying it exploded.

Facebook has built and open sourced its Surround 360 camera. As for back-end infrastructure, it’s developed an open-rack network switch called Wedge, the Open Vault for storage, plus sensors for the Telecom Infra Project’s OpenCellular platform. And finally, through its acquisition of Oculus, Facebook has built wired and mobile virtual reality headsets.


But as Facebook grows, it has the resources and talent to try new approaches in hardware. With over 1.8 billion users connected to just its main Facebook app, the company has a massive funnel of potential guinea pigs for its experiments.

Today’s announcements are naturally unsettling. Hearing about a tiny startup developing these advanced technologies might have conjured images of governments or corporate conglomerates one day reading our mind to detect thought crime, like in 1984. Facebook’s scale makes that future feel more plausible, no matter how much Zuckerberg and Dugan try to position the company as benevolent and compassionate. The more Facebook can do to institute safeguards, independent monitoring, and transparency around how brain-interface technology is built and tested, the more receptive it might find the public.

A week ago Facebook was being criticized as nothing but a Snapchat copycat that had stopped innovating. Today’s demos seemed designed to dismantle that argument and keep top engineering talent knocking on its door.

“Do you want to work for the company who pioneered putting augmented reality dog ears on teens, or the one that pioneered typing with telepathy?” You don’t have to say anything. For Facebook, thinking might be enough.

The MOST IMPORTANT QUESTIONS!

There is no established legal protection for the human subject when researchers use Brain Machine Interface (cybernetic technology) to reverse engineer the human brain.

The progressing neuroscience using brain-machine-interface will enable those in power to push the human mind wide open for inspection.

There is cause for alarm. What kind of privacy safeguards are needed when computers can read your thoughts?

In recent decades, areas of research involving nanotechnology, information technology, biotechnology and neuroscience have emerged, resulting in new products and services.

We are facing an era of synthetic telepathy, with brain-computer interfaces and communication technology based on thoughts, not speech.

An appropriate, albeit alarming, question is: “Do you accept being enmeshed in a computer network and turned into a multimedia module?” Authorities will be able to collect information directly from your brain, without your consent.

This kind of research in bioelectronics has been progressing for half a century.